Become a leader in the IoT community!

Join our community of embedded and IoT practitioners to contribute experience, learn new skills and collaborate with other developers with complementary skillsets.

Hey @.araki_, we need some help from you, please. We are in the same boat you were: we are trying to get ARM firmware built using Bazel and can’t seem to get it going. @undefined2001 is working on this now. Could we get some help from you, please?

I didn’t use bazel. Since I’m using cube ide, I used this generation script: https://github.com/tensorflow/tflite-micro/blob/main/tensorflow/lite/micro/docs/new_platform_support.md#step-1-build-tflm-static-library-with-reference-kernels

It outputs a directory that I included in my project, and I added the necessary directories as “include” folders in the build settings. I still needed to copy further directories from the tflite-micro project itself, as I was getting errors about specific header files not being found when building.

But once I successfully integrated it into my project, I’m getting a lot of errors from the tensorflow code itself. So I temporarily kept my project aside for now. I’m away without my laptop now, but I can answer any questions.

I saw that

after adding tflite with X-Cube-AI my project won’t even build

so I left the cubeide

now trying some other `Baaazeeel` way

Have you tried building the examples?

https://github.com/tensorflow/tflite-micro/tree/main/tensorflow/lite/micro/examples/hello_world

I didn’t use X-cube-AI. I tried to just integrate the whole micro directory into my build.

For me, it seems like they want us to follow their own directory tree and build system instead of using it as a library in another project

Ah so Bazel is the way

we can change it most probably but we have to change it quite a lot

I would say most probably I am at the last stage of success

one more error fixed and I am good to go

and such his epitaph read

see my toolchain is now changed

bad news i was unable to handle this include

I tried many ways but sadly it didn’t work

I hope it will work by tomorrow morning

don’t be weary, issues are finite

Here’s how I did it for stm32f7xx_it.h: https://github.com/daleonpz/cmake-template/tree/example/hello_world_stm32f746G_disc/src

Here’s the *.cmake for the toolchain: https://github.com/daleonpz/cmake-buildsystem/blob/04d2c37d2d9c83212f4a579c42fdad34b815c2a4/toolchains/cross/cortex-m7_hardfloat.cmake

with cmake we have no issue man

but we have to use bazel

Hmmm @undefined2001 try finding a guide to migrate from cmake to Bazel

maybe https://github.com/bazelembedded/bazel-embedded

@superbike_z, did you try to convert the model to a C array?

The model is converted; it’s running the inference that’s the issue.

@undefined2001 I *think* I got it working.

Run `make -f tensorflow/lite/micro/tools/make/Makefile TARGET=cortex_m_generic TARGET_ARCH=cortex-m4+fp microlite` to create a `libtensorflow-microlite.a` library file.

Then add it to your stm project and add it as a library to link in build settings. Include the necessary header files as well.

I just ran an inference with their hello world example. I keep getting output 52. I don’t know whether that is correct, but at least it is working.
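One way to sanity-check a value like 52, assuming it is the raw int8 output of a quantized model, is to dequantize it with the standard TFLite affine formula `real = scale * (q - zero_point)`. The sketch below is only an illustration: the scale and zero point are hypothetical placeholders, and the real values have to be read from the model's output tensor (`params.scale` and `params.zero_point` in TFLM).

```python
def dequantize(q_value: int, scale: float, zero_point: int) -> float:
    """Map a quantized integer back to a real value: scale * (q - zero_point)."""
    return scale * (q_value - zero_point)


# HYPOTHETICAL quantization parameters, for illustration only.
# Read the real ones from the output tensor of your own model.
EXAMPLE_SCALE = 0.008
EXAMPLE_ZERO_POINT = 0

if __name__ == "__main__":
    real = dequantize(52, EXAMPLE_SCALE, EXAMPLE_ZERO_POINT)
    print(f"raw 52 -> {real:.3f}")
```

If the dequantized number is close to what the model should predict for the input you fed in, the pipeline is probably wired up correctly.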

let me look into it

bazel is killing me man

trying it since last week

non stop everyday

@.araki_ how did you implement it in your project?

can you please share your github?

I’m not making any actual project at the moment. I’m just learning and wanted to get TFLM working inside an STM generated project. Here’s a gist on steps I followed:

https://gist.github.com/arkreddy21/427a97d4cd1431ebc766dd70b5dc8104

I just solved many errors that came up while trying to make it work, so ask me if there is any specific issue.

I am getting an error related to flatbuffers

include headers

Add `core/Inc/third_party/flatbuffers/include` to the include directories in build settings.

there is nothing in it

include is empty

I compiled the library

now I guess I have to use that in my project

I have a `libtensorflow-microlite.a`

You also have to generate a file structure and copy it to your project. Have a look at my gist.

this portion?

yep

I want to use my custom model what should I do?

any idea

This just sets up everything. To run inference, first convert your model into a C array. Then you need to invoke it. I added an example main file to the gist; please refer to it.

You can also look at the generated examples in /tflm-tree
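The "convert your model into a C array" step is commonly done with `xxd -i`. As a rough equivalent, here is a minimal Python sketch that renders the bytes of a `.tflite` file as C++ source. The names `g_model`, `model.tflite` and `model_data.cc` are hypothetical, and the 16-byte alignment and `const` qualifier are assumptions (on typical STM32 linker scripts, `const` data ends up in flash rather than RAM, but check your own map file).

```python
from pathlib import Path


def bytes_to_c_array(data: bytes, name: str = "g_model", per_line: int = 12) -> str:
    """Render raw bytes as a C++ array definition, similar to `xxd -i`."""
    lines = []
    for i in range(0, len(data), per_line):
        chunk = data[i:i + per_line]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    body = "\n".join(lines)
    # alignas is a C++11 keyword, so the output is meant for a .cc/.cpp file.
    return (
        f"alignas(16) const unsigned char {name}[] = {{\n{body}\n}};\n"
        f"const unsigned int {name}_len = {len(data)};\n"
    )


def convert(tflite_path: str, out_path: str, name: str = "g_model") -> None:
    """Read a .tflite flatbuffer and write it out as a C++ source file."""
    data = Path(tflite_path).read_bytes()
    Path(out_path).write_text(bytes_to_c_array(data, name))
```

Usage would look like `convert("model.tflite", "model_data.cc")`, after which the generated file is added to the project and the array is passed to the interpreter setup code.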

I did it to an extent, but have you used the error reporter?

in my case the error reporter is missing

I did not use error reporter but it seems to be present in tflm-tree.

Also, be sure to include all the operations your model needs in the OpResolver, and allocate a large enough tensor arena.

my bad

in my case it is also there

just the path was a bit different

let me fix it too

thanks for all the help

I will write a detailed guide since I am not doing it with CubeIDE

@.araki_ here comes the nightmare

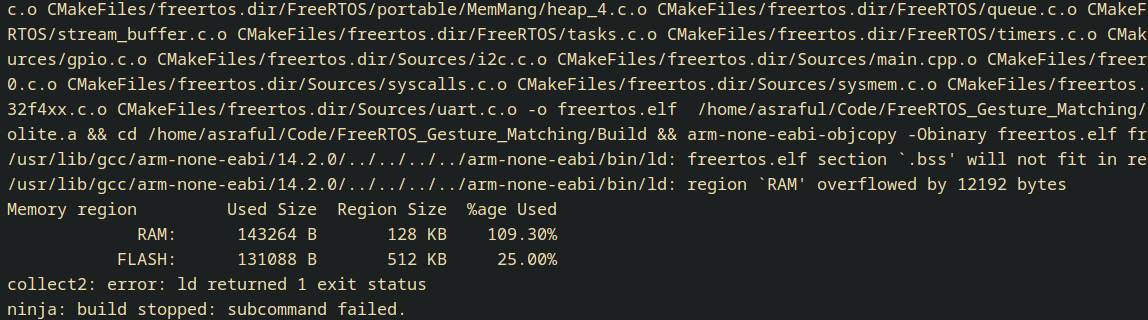

Ah, I guess RTOS and TFLM together is too much. You’re doing a release build, right? What board/MCU are you using?

I have only 128KB ram

I think too much

I wonder what optimization you will employ now

I can make release but that won’t do much

`Os`

Idk about your build system but stm cube gave me 10x less size on release. Also STM32F407 has 128kb ram + additional 64kb core coupled ram, which could be used with some tinkering I think.

I have 128KB ram

using stm32f446re

no extra ram

I am using optimization though

but the issue is with my data sample’s array and also the model

model was approx 58kb

Well, that itself takes half the ram. Maybe you need to retrain smaller network, with quantization and all

also rtos taking some memory itself

It’s just 9% over, so I think you could optimize it

well whole firmware is not complete

I haven’t created the task and assigned them stack

I was just trying to do a test compile

Oh. I don’t really know about your project so can’t help much. I don’t have experience with rtos either

yeah but now I am happy, at least I added `tflite-micro` to it

Yeah that’s good. I’m still learning. I’m taking a tinyml course and wanted to use tflite-micro on my board. There’s still a long way before I can make any decent project

same here too

maybe I should move to some nucleo 144 board

that have approximately 1MB+ ram

Yeah, a bigger mcu is best when tinkering. You can focus on functionality first and optimization later

So what is happening? Did you minimize enough and get inference running?

Nope, it seems there is not much to minimize. The code I was compiling didn’t even assign a stack to each task yet

so there is more code coming

also, I have enabled the linker’s garbage collection

that also didn’t help much

Ah, so you need to optimize your stack sizes for the tasks? So a FreeRTOS issue, not an ML issue

No, no. What I am saying is that I haven’t even assigned memory for each task yet, and it is already overflowing

Ah then where is your memory going?

58kb for the model

and the rest to freeRTOS

FreeRTOS normally used to consume around 50 KB of my RAM, and the rest of the increase wasn’t proportional

How much RAM is on the ST part you are using?

128KByte

so your tasks are taking 70kb?

I’m a fool

My stack

No, no, I didn’t say that. I am asking if this is indeed the case

I was using default configuration of freeRTOS

and that assigned 75kb for heap
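To see why the link overflows before any task stack is assigned, the figures from this thread can be added up. The sketch below is a back-of-the-envelope budget only: it uses the 128 KB RAM, 58 KB model and 75 KB FreeRTOS heap mentioned above, treats 1 KB as 1024 bytes, and assumes the model array really sits in RAM. It ignores the tensor arena, sample buffers and other statics, so the real linker report will differ. In FreeRTOS the heap size is normally set by `configTOTAL_HEAP_SIZE` in `FreeRTOSConfig.h`.

```python
KIB = 1024


def ram_budget(total_bytes: int, consumers: dict) -> dict:
    """Sum the static RAM consumers and compare against the available RAM."""
    used = sum(consumers.values())
    return {
        "used_bytes": used,
        "free_bytes": total_bytes - used,  # negative means the RAM overflows
        "used_percent": 100.0 * used / total_bytes,
    }


if __name__ == "__main__":
    # Figures quoted in the thread; everything else is left out on purpose.
    report = ram_budget(
        128 * KIB,
        {
            "model array (if held in RAM)": 58 * KIB,
            "FreeRTOS heap (default config)": 75 * KIB,
        },
    )
    print(report)
```

With just these two items the budget is already negative, which suggests that shrinking the FreeRTOS heap or moving the model out of RAM are the first things to try.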

nuance is key

Take a look at this @undefined2001 , just found out about this.

https://blog.st.com/stm32n6/

https://www.mouser.in/ProductDetail/STMicroelectronics/NUCLEO-N657X0-Q?qs=%252BXxaIXUDbq1Ro77qvzSZDA%3D%3D

it’s an interesting MCU but let’s see when we can use it
